In Artificial Intelligence (AI), and particularly in Large Language Models (LLMs), tokens are the fundamental units of text that a model reads and generates. This article covers what tokens are, where they came from, the main types, and why they matter for the cost of using LLMs.

What are Tokens?

In AI, a token is a single unit of text, such as a word, character, or subword, serving as the basic building block of language. Tokens are the atomic units that LLMs use to process, analyze, and generate text. Think of them as the LEGO bricks of language, where each brick (token) can be combined with others to form meaningful structures (sentences, paragraphs, etc.).

Why Do Tokens Exist?

Tokens exist to provide a way for machines to represent and process human language. Human language is complex and nuanced, with words, phrases, and sentences that convey meaning and context. Tokens simplify this complexity by breaking down language into smaller, manageable units that can be processed and analyzed by machines.

A Brief History of Tokens

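The concept of tokens dates back to the early days of computer science, when researchers were exploring ways to represent and process both programming languages and human language. Splitting input into tokens (lexical analysis) became a standard first step in compilers and early language-processing systems in the 1950s and 1960s. In the same period, the linguist Noam Chomsky's work on formal grammars shaped how the structure of sentences is parsed. Chomsky Normal Form, for example, is a restricted way of writing context-free grammar rules that parsing algorithms rely on; it is not itself a tokenization algorithm.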

Another influential idea was the “bag-of-words” model, which dates back to the 1950s and became a staple of natural language processing (NLP) in the decades that followed. The bag-of-words model represents text as a collection of individual words and their counts, without considering the order or context of the words. This model was widely used in early NLP applications, such as text classification and information retrieval.
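To make the "no order, no context" point concrete, here is a minimal Python sketch of a bag-of-words representation. The function name `bag_of_words` and the whitespace-based splitting are assumptions made for this illustration, not a reference implementation.

```python
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Count lowercase, whitespace-separated words, discarding their order."""
    return Counter(text.lower().split())


a = bag_of_words("the dog chased the cat")
b = bag_of_words("the cat chased the dog")

# The two sentences mean different things, but their bags are identical
# because word order is thrown away.
print(a == b)
print(a)
```

The comparison shows the main limitation of the model: sentences with opposite meanings can map to exactly the same representation.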

Modern Tokenization

In recent years, deep learning and large language models have driven the adoption of more sophisticated tokenization algorithms. One of the most popular is “WordPiece,” which was introduced by Google researchers in 2012 and later used in Google's neural machine translation system (2016) and in BERT (2018). WordPiece tokenization represents text as a sequence of subword tokens, which are smaller units of text derived from words.

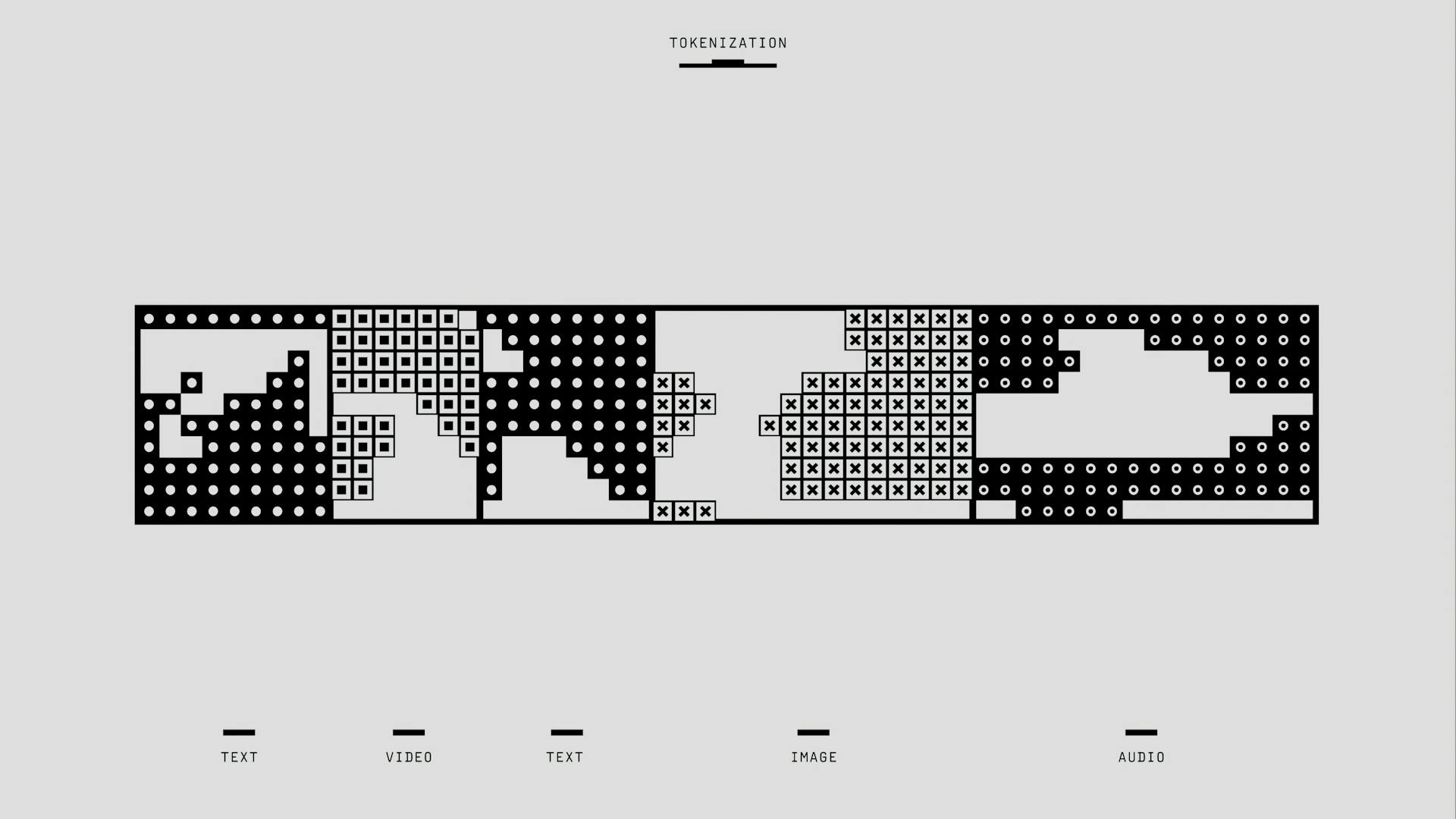

Types of Tokens

There are several types of tokens, each with its unique characteristics and uses:

- Word Tokens: These represent individual words in a sentence. For example, in the sentence “The quick brown fox jumps over the lazy dog,” each word is a separate token.

- Character Tokens: These represent individual characters, such as letters, digits, or special characters. Character tokens are used in character-level language modeling, where the model predicts the next character in a sequence.

- Subword Tokens: Subword tokens are smaller units of text that are derived from words. They are often used in languages with complex morphology, such as German or Russian. For example, the word “unbreakable” can be broken down into subword tokens like “un,” “break,” and “able.”

- Byte-Pair Encoding (BPE) Tokens: BPE is a subword tokenization algorithm rather than a separate kind of unit. It builds its vocabulary by starting from individual characters (or bytes) and repeatedly merging the most frequent adjacent pair. BPE tokens are commonly used in LLMs, as they provide a good balance between word-level and character-level representations.
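As a rough illustration of the token types listed above, the following Python sketch splits text into word and character tokens and performs a single BPE-style merge on a toy corpus. The function names (`word_tokens`, `char_tokens`, `most_frequent_pair`, `merge_pair`) and the corpus are hypothetical and chosen only for this example; production tokenizers learn thousands of merges from large corpora and handle many more edge cases.

```python
import re
from collections import Counter


def word_tokens(text: str) -> list[str]:
    """Split text into words and standalone punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


def char_tokens(text: str) -> list[str]:
    """Treat every character, including spaces, as its own token."""
    return list(text)


def most_frequent_pair(words: list[list[str]]) -> tuple[str, str]:
    """Return the adjacent symbol pair that occurs most often in the corpus."""
    pairs = Counter()
    for symbols in words:
        pairs.update(zip(symbols, symbols[1:]))
    return pairs.most_common(1)[0][0]


def merge_pair(symbols: list[str], pair: tuple[str, str]) -> list[str]:
    """Replace each occurrence of `pair` in `symbols` with one merged symbol."""
    merged = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged


print(word_tokens("The quick fox."))
print(char_tokens("fox"))

# One BPE training step on a toy corpus: start from characters,
# find the most frequent adjacent pair, and merge it everywhere.
corpus = [list(word) for word in ["hug", "pug", "hugs", "bug"]]
pair = most_frequent_pair(corpus)
corpus_after_merge = [merge_pair(word, pair) for word in corpus]
print(pair)
print(corpus_after_merge)
```

Repeating the last three lines many times is what grows a BPE vocabulary from single characters into frequent subwords and whole words.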

Tokenization Example

Consider the text: “The quick brown fox jumps over the lazy dog.” Every word in this sentence is common, so a WordPiece tokenizer with a typical English vocabulary would likely keep each word whole rather than split it into subwords:

- “The” (1 token)

- “quick” (1 token)

- “brown” (1 token)

- “fox” (1 token)

- “jumps” (1 token)

- “over” (1 token)

- “the” (1 token)

- “lazy” (1 token)

- “dog” (1 token)

Counting words alone, this text is composed of 9 tokens. In practice, a tokenizer would usually also emit the final period as its own token, for a total of 10.
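The sketch below shows, under simplifying assumptions, how a WordPiece-style tokenizer splits a single word: it greedily takes the longest vocabulary entry that matches from the current position. The `##` prefix marks a piece that continues a word, a convention used by BERT's WordPiece vocabulary. The function `wordpiece` and the tiny `TOY_VOCAB` are hypothetical; with this toy vocabulary “jumps” is split in two, whereas a real vocabulary would probably contain it whole.

```python
def wordpiece(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    """Greedy longest-match-first split of one word into vocabulary pieces."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation of a word
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            # No vocabulary entry matches: the whole word is unknown.
            return [unk]
        pieces.append(piece)
        start = end
    return pieces


# Hypothetical toy vocabulary, for illustration only.
TOY_VOCAB = {"the", "quick", "fox", "jump", "##s", "un", "##break", "##able"}

for w in ["the", "jumps", "unbreakable", "dog"]:
    print(w, wordpiece(w, TOY_VOCAB))
```

Words missing from the vocabulary are either broken into known pieces or, as with “dog” here, mapped to an unknown token, which is why real vocabularies are trained to cover common words and fragments.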

Why Tokens are Used to Meter the Usage/Cost of LLMs

Providers charge by the token primarily because it gives them a scalable, predictable pricing model that tracks the computational resources consumed by services like Large Language Models (LLMs). Here are the key reasons why token-based pricing is prevalent:

1. Reflects Computational Costs

Resource Allocation: The processing of requests in LLMs is directly tied to the number of tokens involved. Each token requires computational resources for processing, and charging by tokens allows businesses to align their pricing with the actual resource consumption. This means that tasks requiring more tokens, which are computationally heavier, will naturally incur higher costs for users.

Predictability: By using tokens, businesses can provide a clear and predictable pricing structure. Users can estimate costs based on their expected usage, making budgeting easier. This is particularly useful in environments where users may have varying levels of usage, such as in API calls or model interactions.
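A cost estimate under token-based pricing is simple arithmetic, as the following Python sketch shows. The function `estimate_cost`, the separate input and output rates, and the prices themselves are assumptions made for this example; actual rates and billing rules vary by provider and model.

```python
def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Estimate the cost of one request from its token counts and rates."""
    total = (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    )
    return total / 1_000_000


# Hypothetical rates: 2.00 per million input tokens, 6.00 per million output tokens.
cost = estimate_cost(
    input_tokens=1_500,
    output_tokens=500,
    input_price_per_million=2.00,
    output_price_per_million=6.00,
)
print(f"Estimated cost per request: {cost:.4f}")
print(f"Estimated cost for 10,000 such requests: {cost * 10_000:.2f}")
```

Multiplying the per-request figure by expected request volume gives a first-order budget, provided the token counts are realistic for the workload.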

2. Flexibility and Scalability

Adaptable to Different Use Cases: Token-based pricing accommodates a wide range of applications and user needs. For example, different tasks (like text generation vs. summarization) may consume different amounts of tokens, and a token system allows businesses to adjust pricing according to the specific demands of each task.

Scalable Model: As businesses grow and their user base expands, token-based pricing can scale effectively. Users can purchase tokens in bulk or subscribe to plans that fit their usage patterns, allowing businesses to cater to both small developers and large enterprises efficiently.

3. Simplifies Billing and Usage Management

Standardization of Services: Tokenization standardizes the consumption of services, making it easier for businesses to manage billing. Just as prepaid mobile plans work, users can buy a set number of tokens that they can spend over time, simplifying the transaction process and reducing administrative overhead.

Control Over Costs: Users can monitor their token usage and manage their spending more effectively. This transparency helps in preventing unexpected costs, as users can see how many tokens they have consumed and how many remain.
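The prepaid model described above can be sketched as a small balance tracker. The class `TokenBudget` and its methods are hypothetical and only illustrate the bookkeeping; real providers expose usage through their own dashboards and APIs.

```python
from dataclasses import dataclass


@dataclass
class TokenBudget:
    """Track a prepaid token balance as requests consume it."""

    purchased: int
    used: int = 0

    @property
    def remaining(self) -> int:
        return self.purchased - self.used

    def spend(self, tokens: int) -> None:
        """Record usage, refusing requests that exceed the balance."""
        if tokens < 0:
            raise ValueError("token count must be non-negative")
        if tokens > self.remaining:
            raise ValueError("request would exceed the remaining token balance")
        self.used += tokens


budget = TokenBudget(purchased=10_000)
budget.spend(2_500)
budget.spend(4_000)
print(f"Used {budget.used} tokens, {budget.remaining} remaining")
```

Rejecting a request before it is sent, rather than discovering the overrun on an invoice, is the kind of cost control that token-level visibility makes possible.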

4. Encourages Efficient Usage

Incentivizes Optimization: By charging per token, businesses encourage users to optimize their interactions with the model. Users may refine their queries or requests to minimize their usage, leading to more efficient use of the service overall.

Focus on Value: Token-based pricing allows businesses to focus on the value delivered to users rather than the underlying computational complexity. This approach can be more appealing to customers, as they are often more concerned with the outcomes of their interactions than the technical details of how those outcomes are achieved.

In summary, charging by tokens provides a structured, predictable, and scalable approach to pricing that aligns closely with the computational resources consumed by various tasks in LLMs. This model benefits both businesses and users by simplifying billing, encouraging efficient usage, and allowing for flexibility in service offerings.

Challenges of Token-Based Pricing

Customers face several challenges when using a token-based metering system for Large Language Models (LLMs). These challenges can complicate their experience and impact their overall satisfaction with the service. Here are the main issues identified:

1. Complexity in Understanding Pricing

Confusion Over Token Consumption: Customers may struggle to understand how many tokens their inputs will consume, as tokenization can vary significantly between different models and providers. This variability can lead to unexpected costs if users are not familiar with the tokenization process and how it affects their usage.

Estimating Costs: Predicting expenses based on token usage can be difficult. Without a clear understanding of how tokens translate into costs, customers might find it challenging to budget effectively for their usage, leading to potential overspending.

2. Variability in Tokenization Methods

Inconsistent Token Definitions: Different LLM providers may implement varying tokenization methods, which can result in different token counts for the same input text. This inconsistency complicates comparisons between services and can lead to confusion about which provider offers the best value for money.
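The effect of differing tokenization methods on token counts can be seen even with very simple schemes. The Python sketch below counts tokens for the same sentence under three deliberately naive tokenizers; the scheme names and the sample text are made up for this illustration and do not correspond to any particular provider.

```python
import re

text = "Tokenization isn't uniform."

# Three simple schemes applied to the same input.
counts = {
    "whitespace words": len(text.split()),
    "words and punctuation": len(re.findall(r"\w+|[^\w\s]", text)),
    "characters": len(text),
}

for scheme, count in counts.items():
    print(f"{scheme}: {count} tokens")
```

Because the same text yields a different count under each scheme, a price per token is only comparable across providers once the token counts for a representative workload are measured with each provider's own tokenizer.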

Impact on Performance: The effectiveness of a model can be influenced by its tokenization strategy. If customers are unaware of these differences, they may inadvertently choose a model that does not meet their needs, affecting the quality of their outputs.

3. Budgeting and Financial Management

Unpredictable Costs: Fluctuations in token usage can lead to unpredictable billing, making it difficult for businesses to manage their budgets. If usage spikes unexpectedly, costs can escalate quickly, which may strain financial resources.

4. Limitations on Input Size

Token Limits: LLMs can handle only a maximum number of tokens in a single request, covering both input and output. This limit, known as the context window, restricts how much text can be processed at one time. It may hinder users working with large documents or complex queries, as important contextual information is lost if the text exceeds the limit.

Truncation of Inputs: When inputs exceed the token limit, they may be truncated, leading to incomplete processing and potentially affecting the quality and relevance of the model's output. Users must be aware of these limits to optimize their interactions with the model effectively.
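A minimal sketch of truncation, assuming the input is already a list of tokens: keep as many tokens as fit after reserving room for the model's reply, and report what was cut. The function `fit_to_context` and its parameters are hypothetical; real systems may truncate from the start, summarize, or reject the request instead.

```python
def fit_to_context(
    tokens: list[str],
    max_tokens: int,
    reserved_for_output: int = 0,
) -> tuple[list[str], list[str]]:
    """Split tokens into the part that fits the context window and the rest."""
    budget = max_tokens - reserved_for_output
    if budget < 0:
        raise ValueError("reserved_for_output cannot exceed max_tokens")
    return tokens[:budget], tokens[budget:]


tokens = "a b c d e f".split()
kept, dropped = fit_to_context(tokens, max_tokens=5, reserved_for_output=1)
print("kept:", kept)
print("dropped:", dropped)
```

Returning the dropped tokens makes the loss visible, so the caller can decide whether to split the document into chunks rather than silently discard its tail.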

Conclusion

In summary, token-based metering systems for LLMs offer a structured approach to billing, but they also present challenges related to pricing complexity, variability in tokenization, budgeting difficulties, and limitations on input size. Understanding these challenges helps customers navigate the token economy effectively and make informed decisions about their usage of LLM services.
