The ARC Prize is a $1,000,000+ public competition designed to inspire new ideas and drive open-source progress towards Artificial General Intelligence (AGI) by reaching a target score on the ARC-AGI (Abstraction and Reasoning Corpus for Artificial General Intelligence) benchmark. The benchmark measures how well an AI system can generalize to novel tasks, which is considered a key aspect of intelligence.

Here are some key details about the ARC Prize:

- The ARC Prize 2024 set a target score of 85% on the ARC-AGI private evaluation set. The competition ran from June to November 2024, with prizes including a grand prize of $600,000 for the first team to reach the 85% target, as well as other prizes for progress and paper submissions.

- The competition was run on Kaggle, where participants attempted to solve 100 tasks from the ARC-AGI private evaluation set on a virtual machine with limited resources. Participants had to open-source their solutions to be eligible for prizes.

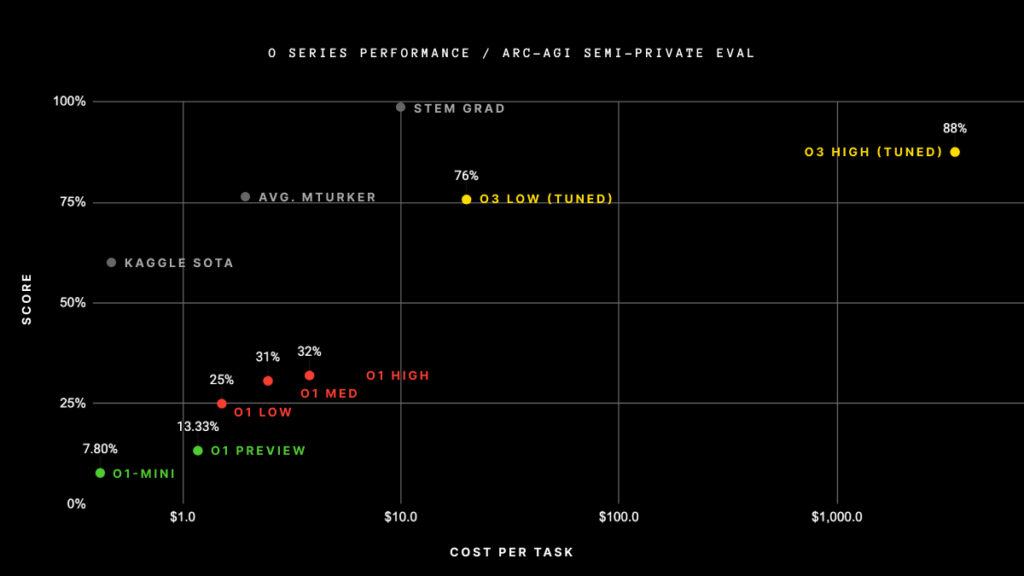

- The highest score achieved during the 2024 competition was 55.5% by MindsAI, though the team was not eligible for a prize because it did not open-source its solution. That score was beaten by OpenAI's o3, which achieved 75.7% at the end of 2024, suggesting that the benchmark itself might be saturated in the very near future.

- The competition also featured a secondary public leaderboard with relaxed compute constraints and internet access. This leaderboard was used to evaluate performance using commercially available APIs.

- The ARC Prize also included "Paper Awards" to reward novel concepts, regardless of the scores achieved, with prizes awarded to several papers describing new techniques.

- The ARC Prize is intended to be an annual competition until the ARC-AGI benchmark is defeated and a public reference solution is shared. The organizers plan to redesign the 2025 competition based on lessons learned from the 2024 event.

- The ARC Prize has inspired the development of various tools, datasets, and repositories to support research and development related to ARC-AGI, including domain-specific languages, data generation frameworks, and interactive web tools.

Overall, the ARC Prize seeks to promote open research in AGI, given that much of frontier AI research is no longer published by industry labs. The goal is to encourage researchers to develop new techniques and openly share them with the community. The competition is also intended to help improve the ARC-AGI benchmark itself.

How does the ARC Prize work?

The ARC-AGI (Abstraction and Reasoning Corpus for Artificial General Intelligence) benchmark is designed to measure general intelligence and skill-acquisition efficiency in AI systems. Here's how it works:

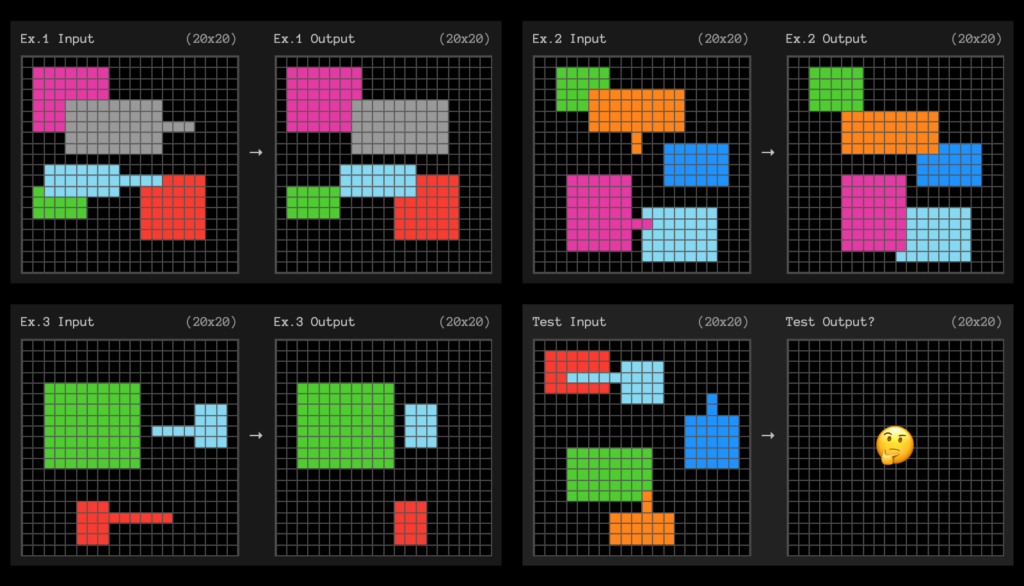

Task Structure

- Each task in ARC-AGI consists of input-output examples presented as grids.

- The grids can be any size from 1x1 to 30x30.

- Each square in the grid can be one of ten colors.
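The task structure above can be sketched in a few lines of Python. This is a minimal illustration, not the official tooling: it assumes the JSON-style layout of the public ARC-AGI task files (a `train` list and a `test` list of input-output pairs) and that the ten colors are encoded as the integers 0 to 9. The helper name `is_valid_grid` and the toy grids are hypothetical.

```python
from typing import List

Grid = List[List[int]]

MAX_SIDE = 30     # grids range from 1x1 to 30x30
NUM_COLORS = 10   # ten colors, assumed here to be encoded as integers 0-9


def is_valid_grid(grid: Grid) -> bool:
    """Return True if the grid is rectangular, within the size limits,
    and contains only valid color codes. (Hypothetical helper.)"""
    if not isinstance(grid, list) or not (1 <= len(grid) <= MAX_SIDE):
        return False
    width = len(grid[0]) if isinstance(grid[0], list) else 0
    if not (1 <= width <= MAX_SIDE):
        return False
    for row in grid:
        # Every row must have the same width as the first row.
        if not isinstance(row, list) or len(row) != width:
            return False
        if any(not isinstance(c, int) or not (0 <= c < NUM_COLORS) for c in row):
            return False
    return True


# A toy task with made-up grids (not taken from the real dataset).
example_task = {
    "train": [
        {"input": [[0, 1], [1, 0]], "output": [[1, 0], [0, 1]]},
        {"input": [[2, 0], [0, 2]], "output": [[0, 2], [2, 0]]},
    ],
    "test": [{"input": [[3, 0], [0, 3]]}],
}

# Check every grid in every pair of the toy task.
all_valid = all(
    is_valid_grid(pair[key])
    for split in ("train", "test")
    for pair in example_task[split]
    for key in pair
)
print(all_valid)
```

Representing a grid as a plain list of rows keeps the example close to the JSON files and makes exact comparison between two grids a simple equality check.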

Evaluation Process

- To solve a task, the AI system must produce a pixel-perfect, correct output grid for the evaluation input, including determining the correct dimensions of the output grid.

- The benchmark includes public training and evaluation sets, as well as a private evaluation set.

- The public training set contains 400 task files for algorithm training.

- The public evaluation set also contains 400 task files for testing algorithm performance.

- The private evaluation set, used for the official leaderboard, contains 100 task files.

Scoring

- Performance is measured by the percentage of correct predictions on the private evaluation set (100 tasks).

- For each task, the system must predict exactly 2 outputs for every test input grid.

- A task is considered solved only if the predicted output matches the ground truth exactly.
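The scoring rules above can be illustrated with a short Python sketch. It is written under stated assumptions rather than taken from the official scorer: a task is treated as solved when, for every test input, at least one of the two attempts equals the ground truth exactly, and the score is the percentage of solved tasks. The official leaderboard may aggregate results differently. The names `score_submission`, `attempts`, and `solutions` are hypothetical.

```python
from typing import Dict, List

Grid = List[List[int]]


def score_submission(
    attempts: Dict[str, List[List[Grid]]],
    solutions: Dict[str, List[Grid]],
) -> float:
    """Return the percentage of tasks solved.

    attempts[task_id][i] holds up to two predicted grids for test input i;
    solutions[task_id][i] is the ground-truth grid for test input i.
    Assumption: a task is solved only if every test input has a matching attempt.
    """
    if not solutions:
        return 0.0
    solved = 0
    for task_id, truths in solutions.items():
        task_attempts = attempts.get(task_id, [])
        ok = len(task_attempts) == len(truths) and all(
            # List equality checks both the dimensions and every cell.
            any(guess == truth for guess in tries[:2])
            for tries, truth in zip(task_attempts, truths)
        )
        solved += int(ok)
    return 100.0 * solved / len(solutions)


# Toy data: task "t1" is solved by its second attempt; task "t2" fails
# because neither attempt has the right dimensions.
solutions = {
    "t1": [[[1, 0], [0, 1]]],
    "t2": [[[2, 2]]],
}
attempts = {
    "t1": [[[[0, 0], [0, 0]], [[1, 0], [0, 1]]]],
    "t2": [[[[2, 2, 2]], [[2], [2]]]],
}
print(score_submission(attempts, solutions))  # 50.0
```

Because a prediction with the wrong shape can never equal the ground truth, the requirement to determine the correct output dimensions is enforced by the same equality check.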

Key Features

- Novel Tasks: Each task in the dataset follows a different logic, ensuring that systems cannot be prepared for specific tasks in advance.

- Core Knowledge Priors: The benchmark assumes only basic prior knowledge that humans typically acquire before age four, such as objectness, basic topology, and elementary integer arithmetic.

- No Specialized Knowledge: Tasks do not require specialized world knowledge or language skills to solve.

- Human Verification: All tasks have been verified by at least two STEM professionals to ensure solvability by humans.

Benchmark Difficulty

- As of 2024, the state-of-the-art AI performance on ARC-AGI is 55.5% on the private evaluation set.

- Human performance averages between 73.3% and 77.2% correct on the public sets.

By focusing on skill-acquisition efficiency rather than task-specific performance, ARC-AGI aims to provide a more accurate measure of general intelligence in AI systems.

Why is solving ARC puzzles difficult for AIs?

Solving ARC puzzles is particularly challenging for AI systems due to several key factors:

Complexity of Problem-Solving

- Few-Shot Learning Requirement: ARC puzzles are designed to assess an AI's ability to generalize from a limited number of examples (3-5). This few-shot learning requirement means that AI models cannot rely on extensive training data or memorization, which are common strategies in traditional machine learning. Instead, they must extract underlying principles and apply them to novel situations, similar to how humans learn.

- Resistance to Memorization: The tasks are specifically crafted to resist simple memorization strategies. They often require understanding abstract concepts and relationships rather than just recognizing patterns from previous examples. As François Chollet, the creator of the ARC benchmark, noted, these tasks are easy for humans but difficult for current AI systems because they do not involve complex knowledge but rather the ability to reason and adapt.

Nature of the Puzzles

- Abstract Reasoning: The puzzles require a deep understanding of abstract reasoning, logic, and sometimes even physics. AI must recognize patterns and apply logical deductions based on given examples, which can be significantly more complex than it appears at first glance.

- Variety of Tasks: Each puzzle presents a distinct learning problem, making it difficult for AI to apply a single strategy across different tasks. This diversity in problem types forces AI systems to develop flexible reasoning capabilities rather than relying on fixed algorithms or heuristics.

Limitations of Current AI Models

- Lack of True Understanding: Many modern AI systems, including large language models (LLMs), primarily operate through advanced memorization and statistical correlation rather than genuine understanding. This means that while they can perform well on specific tasks with large datasets, they struggle with the abstract reasoning required for ARC puzzles.

- High Error Rates: Even with sophisticated approaches like brute-force search or minimum description length strategies, AI solutions have shown limited success on ARC tasks. For instance, the best-performing models going into the 2024 competition had achieved only around 34% accuracy on these puzzles (o3 being the notable exception at the end of 2024), while human performance averages between 85% and 100%.

In summary, the combination of few-shot learning requirements, resistance to memorization, the need for abstract reasoning, and the limitations of current AI models contribute to the difficulty AIs face in solving ARC puzzles. These challenges highlight the gap between human cognitive abilities and current artificial intelligence capabilities in achieving true generalization and reasoning skills.

This gap is narrowing rapidly, and in 2025 a new version of the benchmark, ARC-AGI-2, will be introduced. In development since 2022, it aims to redefine the state of the art. Its goal is to drive AGI research forward with rigorous, high-impact evaluations that expose the current limitations of AI systems. Preliminary testing of ARC-AGI-2 indicates it will be both valuable and extremely challenging, even for advanced models like o3. The launch of ARC-AGI-2, in tandem with the ARC Prize 2025, is anticipated for late Q1.
